Which box will Maxi look in?

| Mindreading | Not Mindreading |
| --- | --- |
| Maxi wants his chocolate. | Chocolate is good. |
| Maxi believes his chocolate is in the blue box. | Maxi’s chocolate is in the red box. |
| Therefore: | Therefore: |
| Maxi will look in the blue box. | Maxi will look in the red box. |

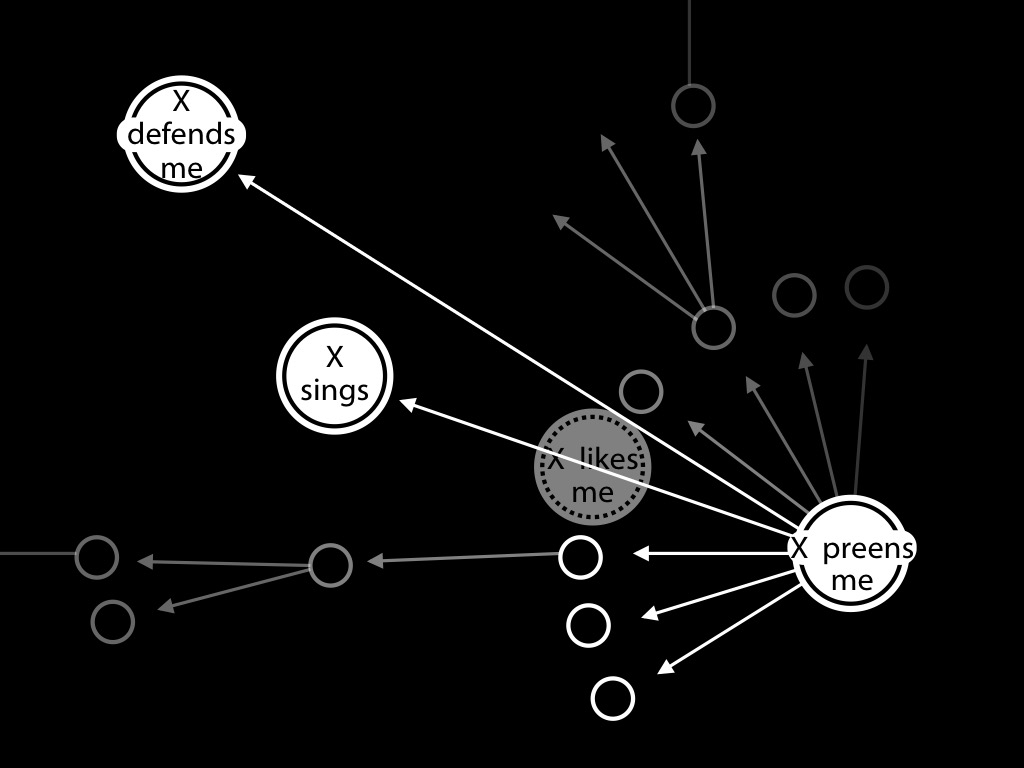

Krupenye et al, 2016

So far:

1. mindreading defined [preliminary]

2. how action predictions can indicate mindreading

3. a nonverbal mindreading test: how anticipatory looking can indicate action prediction

Some Evidence

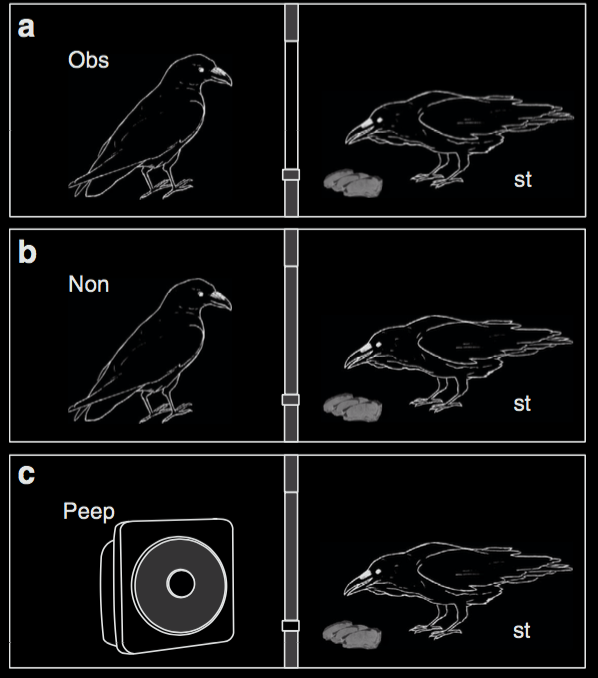

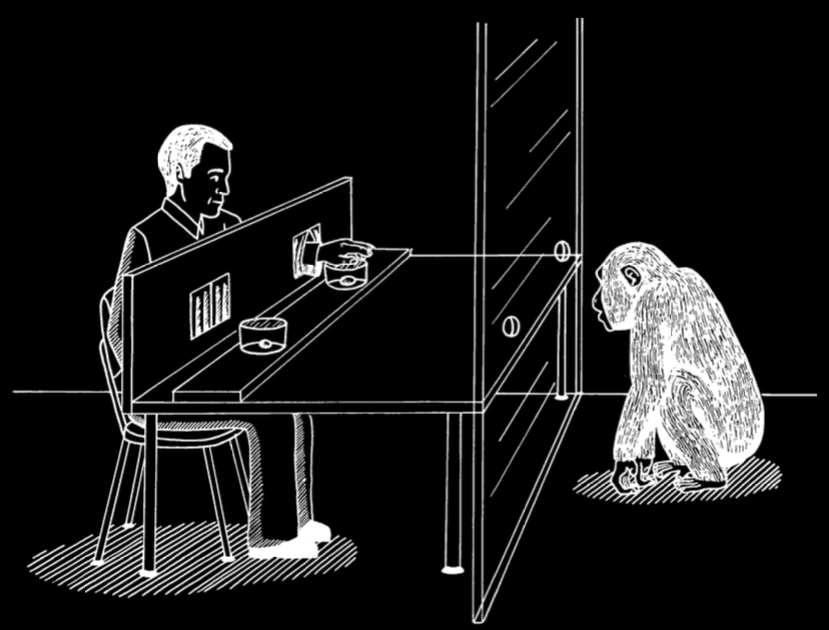

Hare et al (2001, figure 1)

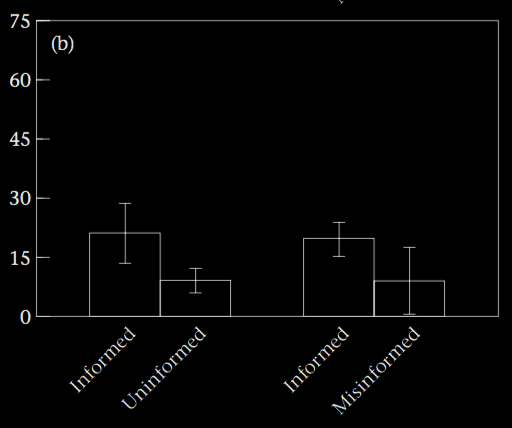

Hare et al (2001, figure 2b)

‘subordinate subjects retrieved a significantly larger percentage of food when dominants lacked accurate information about the location of food

‘(Wilcoxon test: Uninformed versus Control Uninformed: T=36, N=8, P<0.01; Misinformed versus Control Misinformed: T=36, N=8, P<0.01)’

Hare et al (2001, p. 143)
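
The reported statistic can be checked by hand. The sketch below assumes that T is the sum of the ranks of the positive paired differences, with no ties and no zero differences (the slide does not state the convention). On that assumption, T=36 with N=8 is the largest possible value (1+2+...+8=36), so all eight paired differences went in the same direction. The function name `exact_signed_rank_p` is hypothetical.

```python
from itertools import product


def exact_signed_rank_p(n: int, t_observed: int) -> float:
    """Exact two-sided p-value for a Wilcoxon signed-rank statistic.

    Assumes n non-zero paired differences with no tied ranks, and that
    t_observed is the sum of the ranks of the positive differences.
    """
    ranks = range(1, n + 1)
    expected = n * (n + 1) / 4  # mean of T under the null hypothesis
    observed_dev = abs(t_observed - expected)

    # Under the null, each rank is equally likely to carry a + or - sign,
    # so enumerate all 2**n sign assignments and count those at least as
    # far from the expected value as the observed statistic.
    extreme = 0
    for signs in product((0, 1), repeat=n):
        t = sum(r for r, s in zip(ranks, signs) if s)
        if abs(t - expected) >= observed_dev:
            extreme += 1
    return extreme / 2 ** n


p = exact_signed_rank_p(n=8, t_observed=36)
print(p)  # 0.0078125, i.e. 2/256, consistent with P<0.01
```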

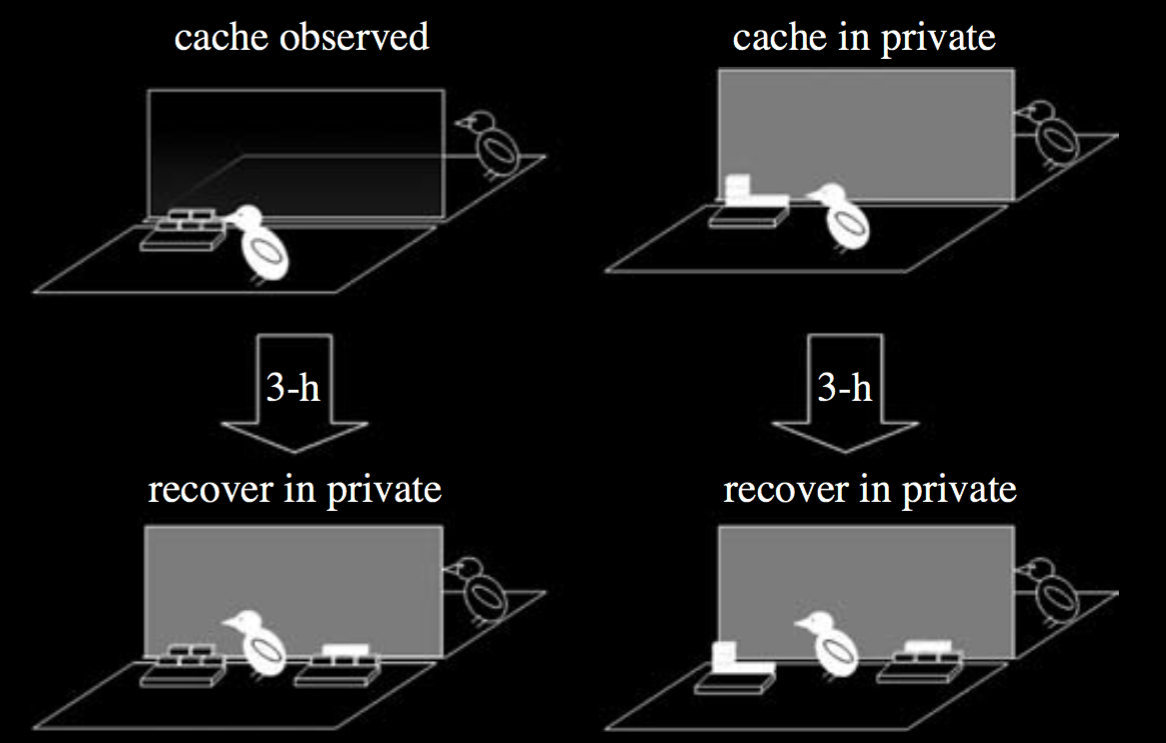

Clayton et al, 2007 figure 11

Clayton et al, 2007 figure 12

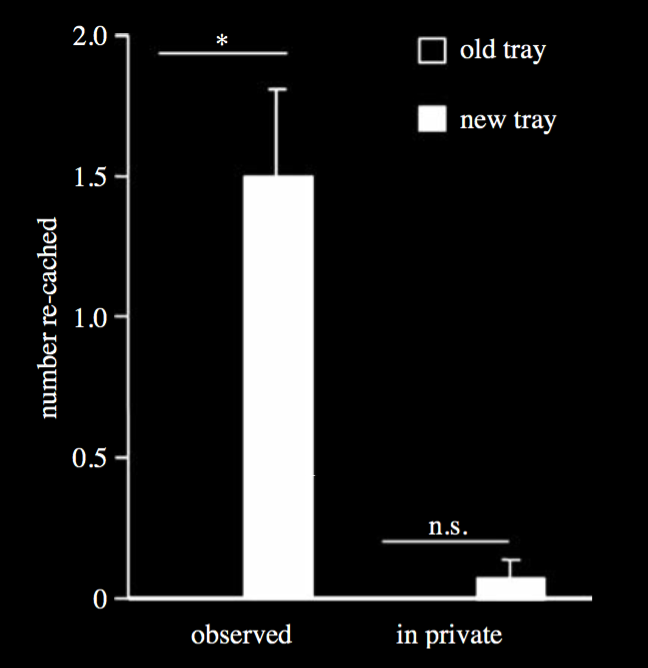

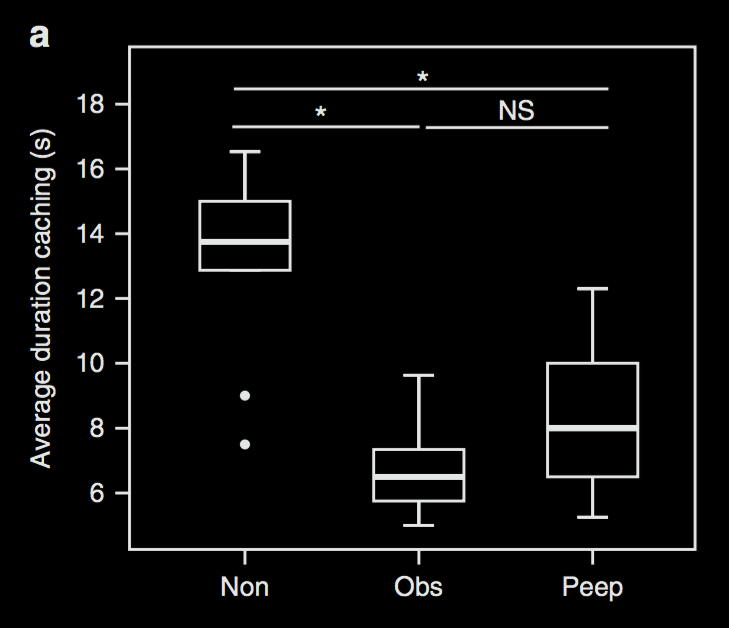

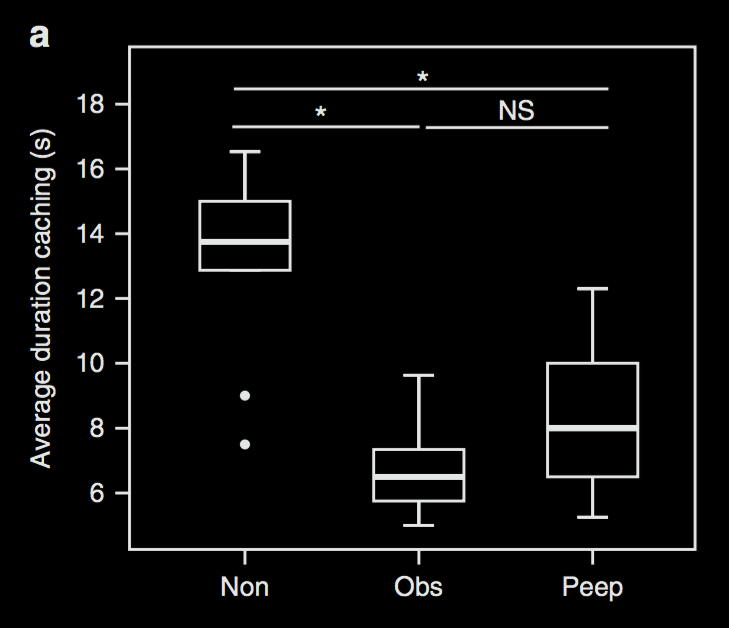

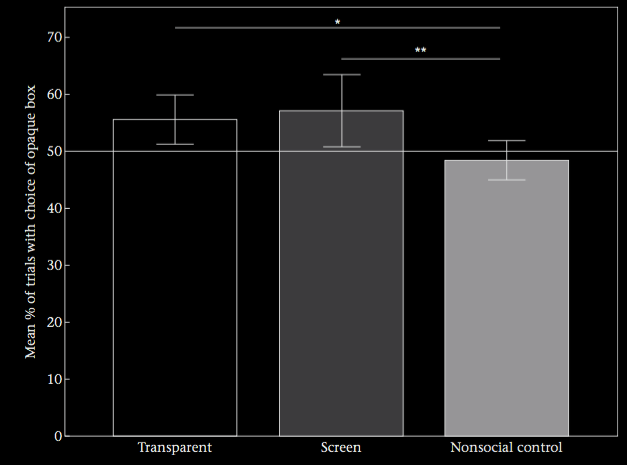

Bugnyar et al, 2016 figure 1

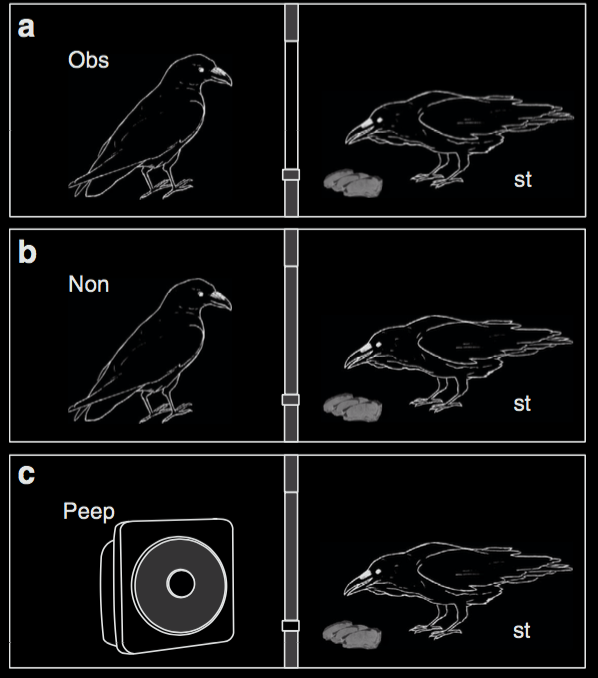

Bugnyar et al, 2016 figure 2a

Bugnyar et al, 2016 figure 1

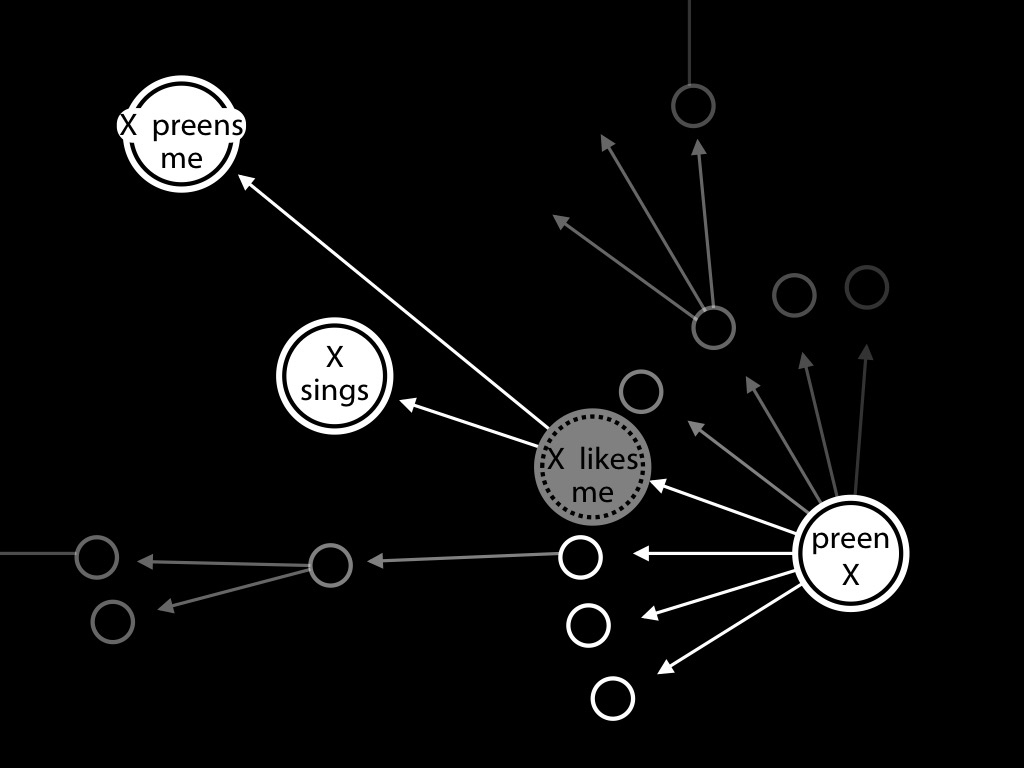

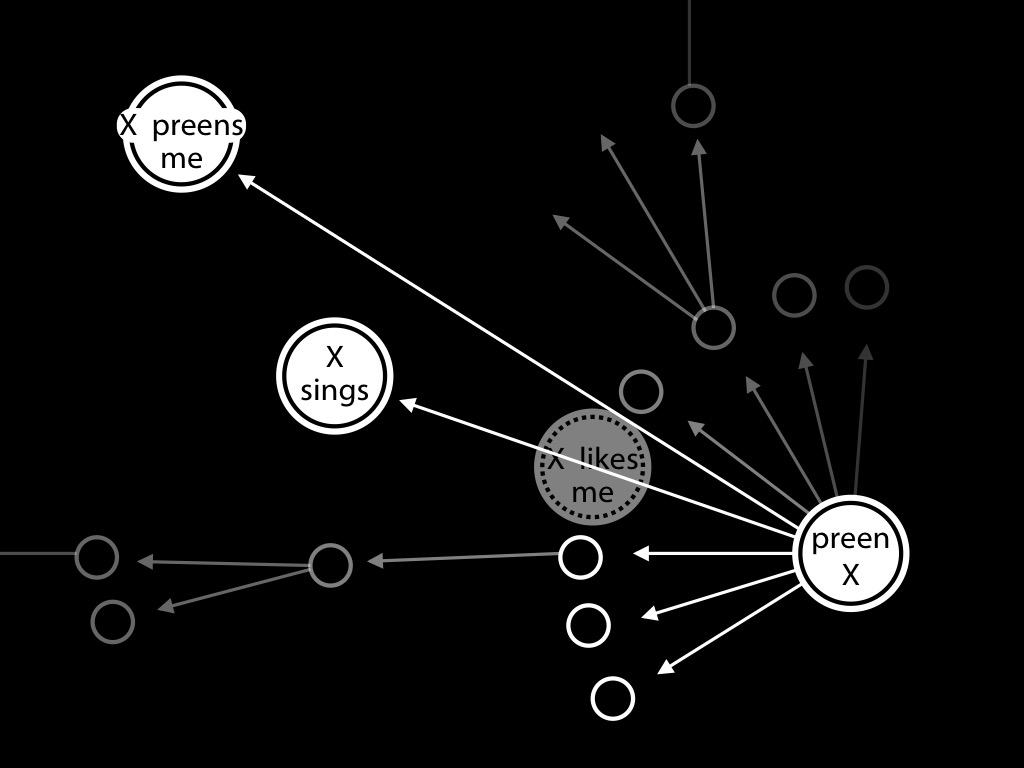

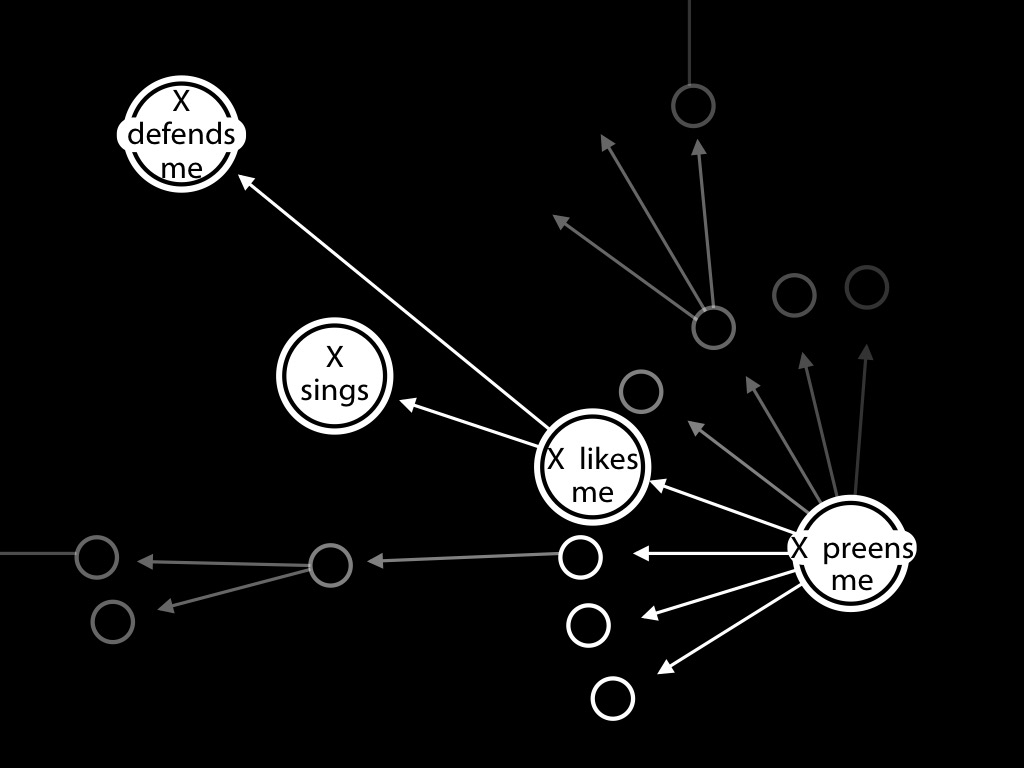

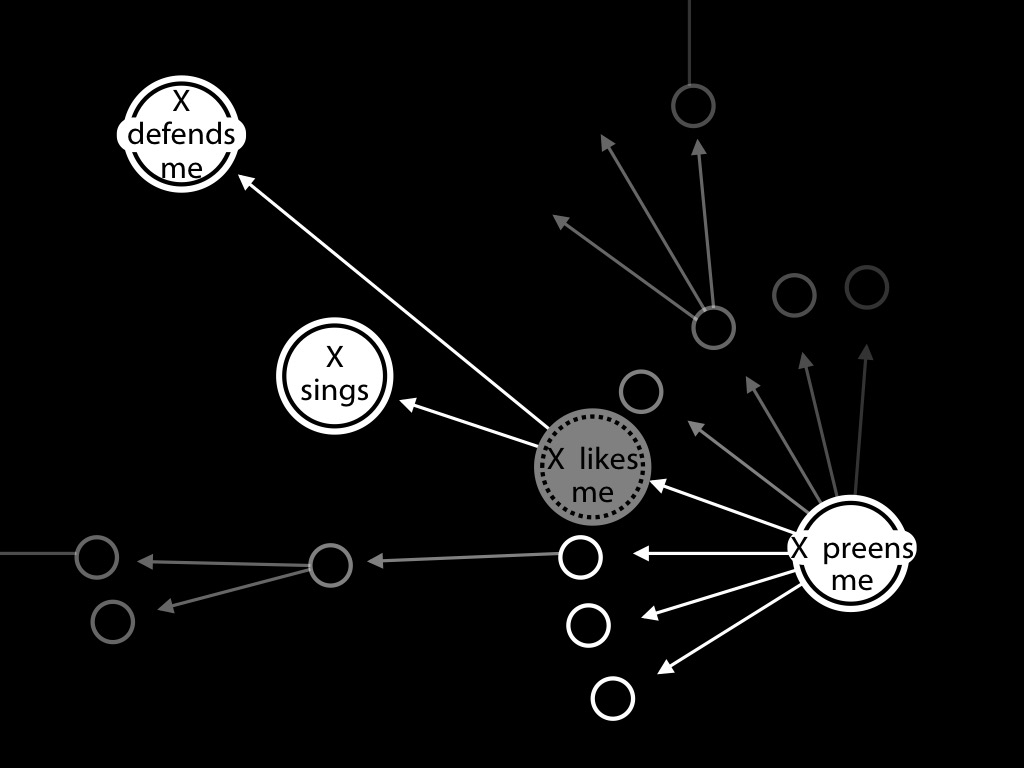

Tracking vs Representing Mental States

Premack & Woodruff, 1978 p. 515

Which action an ape predicts another will perform

depends to some extent on

what the other sees, knows or believes.

theory of mind abilities

vs

theory of mind cognition

Tracking mental states does not imply representing them.

Mindreading is the ability to track others’ mental states

Theory of mind ability

Mindreading is the ability to represent others’ mental states

Theory of mind cognition

Theory of mind abilities are widespread

18-month-olds point to inform, and predict actions based on false beliefs.

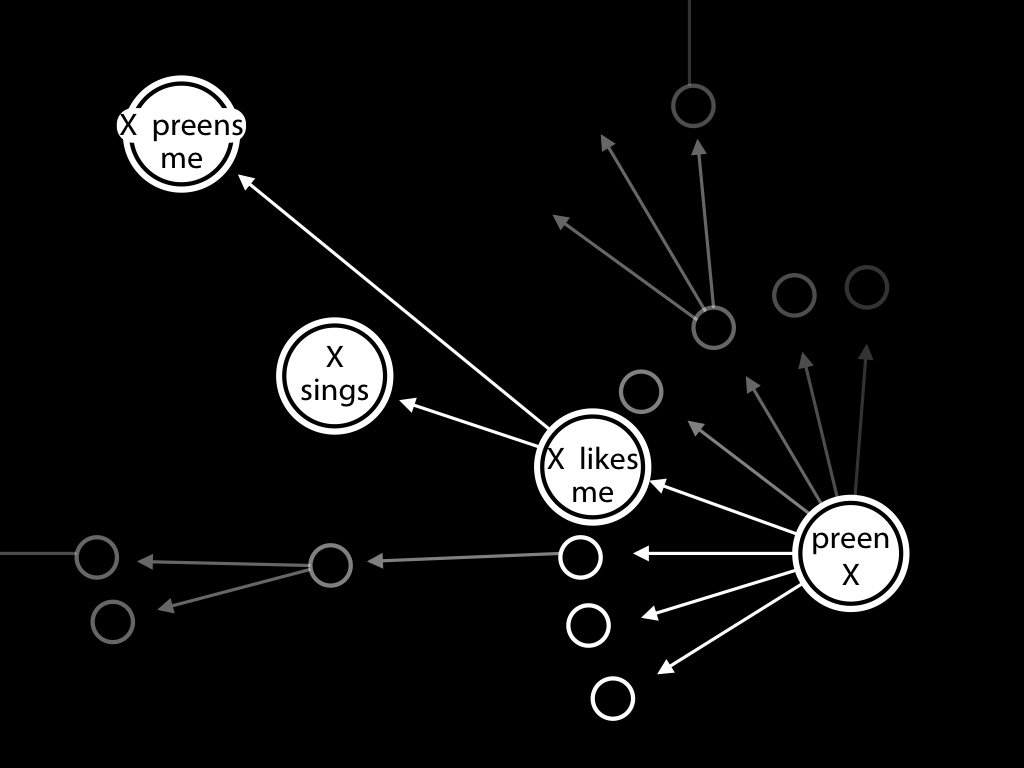

Scrub-jays selectively re-cache their food in ways that deprive competitors of knowledge of its location.

Chimpanzees conceal their approach from a competitor’s view, and act in ways that are optimal given what another has seen.

NSS

The Question, version 0.1

tracking vs representing mental states

What do

human infants, nonhuman great ape adults or adult scrub-jays

reason about, or represent,

that enables them,

within limits,

to track others’ perceptions, knowledge, beliefs and other propositional attitudes?

-- Is it mental states?

What could make others’ mental states intelligible (or identifiable) to a chimpanzee, infant or scrub-jay?

-- Or only behaviours?

What could make others’ behaviours intelligible (or identifiable) to a chimpanzee, infant or scrub-jay?

The Behaviour Reading Demon

Hare et al (2001, figure 1)

‘an intelligent chimpanzee could simply use the behavioural abstraction […]: ‘Joe was present and oriented; he will probably go after the food. Mary was not present; she probably won’t.’’

Povinelli and Vonk (2003)

For any food (x) and agent (y), if any of the following do not hold:

(i) the agent (y) was present when the food (x) was placed,

(ii) the agent (y) was oriented to the food (x) when it was placed,

and:

(iii) the agent (y) can go after the food (x)

then probably not:

(iv) the agent (y) will go after the food (x).

Also, if all of (i)–(iii) do hold, then probably (iv).
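
The rule above can be written out as a short sketch. It takes only observable facts as input and contains no variable for what the agent sees, knows or believes. The class and field names are hypothetical, and the rule's ‘probably’ is simplified to a plain true/false prediction.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    """Observable facts about one agent (y) and one piece of food (x)."""
    was_present: bool   # (i) the agent was present when the food was placed
    was_oriented: bool  # (ii) the agent was oriented to the food when it was placed
    can_go_after: bool  # (iii) the agent can go after the food


def will_probably_go_after(obs: Observation) -> bool:
    """(iv): predicted only if all of (i)-(iii) hold; otherwise probably not."""
    return obs.was_present and obs.was_oriented and obs.can_go_after


# Povinelli and Vonk's two cases, with (iii) assumed to hold for both agents.
joe = Observation(was_present=True, was_oriented=True, can_go_after=True)
mary = Observation(was_present=False, was_oriented=False, can_go_after=True)

print(will_probably_go_after(joe))   # True: Joe will probably go after the food
print(will_probably_go_after(mary))  # False: Mary probably won't
```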

‘Don't go after food if a dominant who is present has oriented towards it’

Penn and Povinelli (2007, 735)

The ‘Logical Problem’ ...

‘since mental state attribution in [nonhuman] animals will (if extant) be based on observable features of other agents’ behaviors and environment ... every mindreading hypothesis has ... a complementary behavior-reading hypothesis.’

Lurz (2011, 26)

Nonhuman Mindreading: The Logical Problem

The logical problem

‘since mental state attribution in [nonhuman] animals will (if extant) be based on observable features of other agents’ behaviors and environment ... every mindreading hypothesis has ... a complementary behavior-reading hypothesis.

‘Such a hypothesis proposes that the animal relies upon certain behavioral/environmental cues to predict another agent’s behavior’

Lurz (2011, 26)

1. Ascriptions of mental states ultimately depend on information about behaviours

- information triggering ascription

- predictions derived from ascription

2. In principle, anything you can predict by ascribing a mental state you could have predicted just by tracking behaviours.
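
Points 1 and 2 can be illustrated with a toy sketch. If ascription is triggered by observable cues, and predictions are derived from the ascription, then composing the two steps gives a direct mapping from cues to predictions that never mentions a mental state. The cue names, state labels and predicted behaviours below are all hypothetical.

```python
from typing import Dict, Tuple

Cues = Tuple[bool, bool]  # hypothetical cues: (was_present, was_oriented)


def ascribe(cues: Cues) -> str:
    """Point 1, first half: observable cues trigger ascription of a mental state."""
    was_present, was_oriented = cues
    return "knows where food is" if was_present and was_oriented else "ignorant"


def predict_from_state(state: str) -> str:
    """Point 1, second half: a prediction is derived from the ascribed state."""
    return "goes for food" if state == "knows where food is" else "stays put"


def mindreader(cues: Cues) -> str:
    return predict_from_state(ascribe(cues))


# Point 2: tabulate the mindreader's outputs into a direct cue -> behaviour map.
all_cues = [(p, o) for p in (False, True) for o in (False, True)]
behaviour_rules: Dict[Cues, str] = {c: mindreader(c) for c in all_cues}


def behaviour_reader(cues: Cues) -> str:
    """Predicts from cues alone, with no intermediate mental state."""
    return behaviour_rules[cues]


# The two predictors agree on every input, so their predictions cannot
# distinguish them in this toy case.
print(all(mindreader(c) == behaviour_reader(c) for c in all_cues))  # True
```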

Is there an experimental solution to the ‘Logical Problem’?

Lurz and Krachun (2011): yes ...

‘Behavior-reading animals can appeal only to ... reality-based, mind-independent facts, such as facts about agents’ past behavior or their current line of gaze to objects in the environment.

‘Mindreading animals, in contrast, can appeal to the subjective ways environmental objects perceptually appear to agents to predict their behavior.’

Lurz and Krachun (2011, p. 469)

Lurz & Krachun 2011, figure 1

The logical problem

1. Ascriptions of mental states ultimately depend on information about behaviours and objects

- information triggering ascription

- predictions derived from ascription

- ways objects appear

2. In principle, anything you can predict by ascribing a mental state you could have predicted just by tracking behaviours.

‘Behavior-reading animals can appeal only to ... reality-based, mind-independent facts, such as facts about agents’ past behavior or their current line of gaze to objects in the environment.

‘Mindreading animals, in contrast, can appeal to the subjective ways environmental objects perceptually appear to agents to predict their behavior.’

Lurz and Krachun (2011, p. 469)

But: objects have appearance properties, and provide affordances, which are independent of any particular mind.

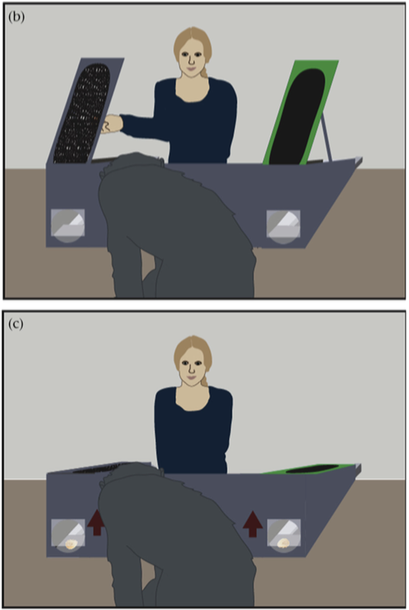

Do goggles solve the ‘Logical Problem’?

‘“self-informed” belief induction variables [... are those] that, if the participant is capable of mentalizing, he or she knows only through extrapolation from her own experience to be indicative of what an agent can or cannot see and, therefore, does or does not believe’

Heyes, 2014 p. 139

Karg et al, 2015 figure 4 (Experiment 2)

Karg et al, 2015 figure 5 (Experiment 2)

Conclusion

Mindreading defined (twice: tracking vs representing)

Evidence for nonhuman mindreading

A question (to be revisited and revised)

The ‘Logical Problem’

Is the ‘Logical Problem’ a logical problem?

What can we conclude so far?

The standard question:

Do nonhuman animals represent mental states or only behaviours?

Obstacle:

The ‘logical problem’ (Lurz 2011)

What could make others’ behaviours intelligible to nonhuman animals?

-- the teleological stance

What could make others’ mental states intelligible to nonhuman animals?

-- minimal theory of mind