Premack & Woodruff, 1978 p. 515

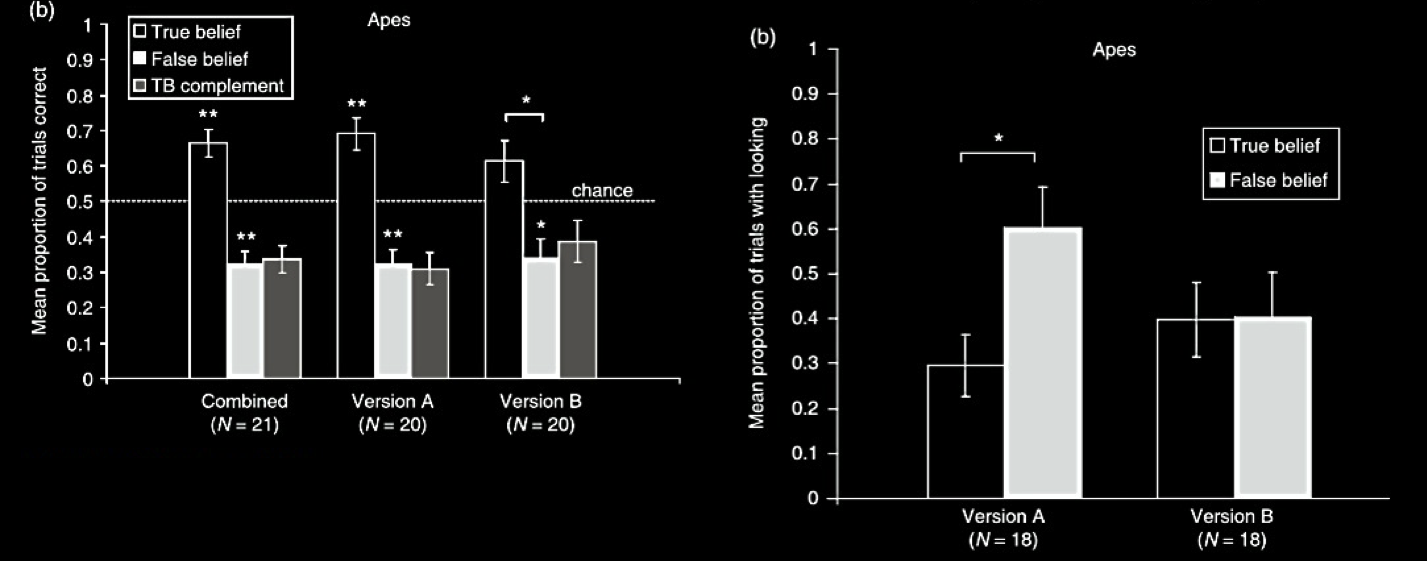

Apes : anticipatory gaze depends on protagonists’ false belief (Krupenye et al, 2017)

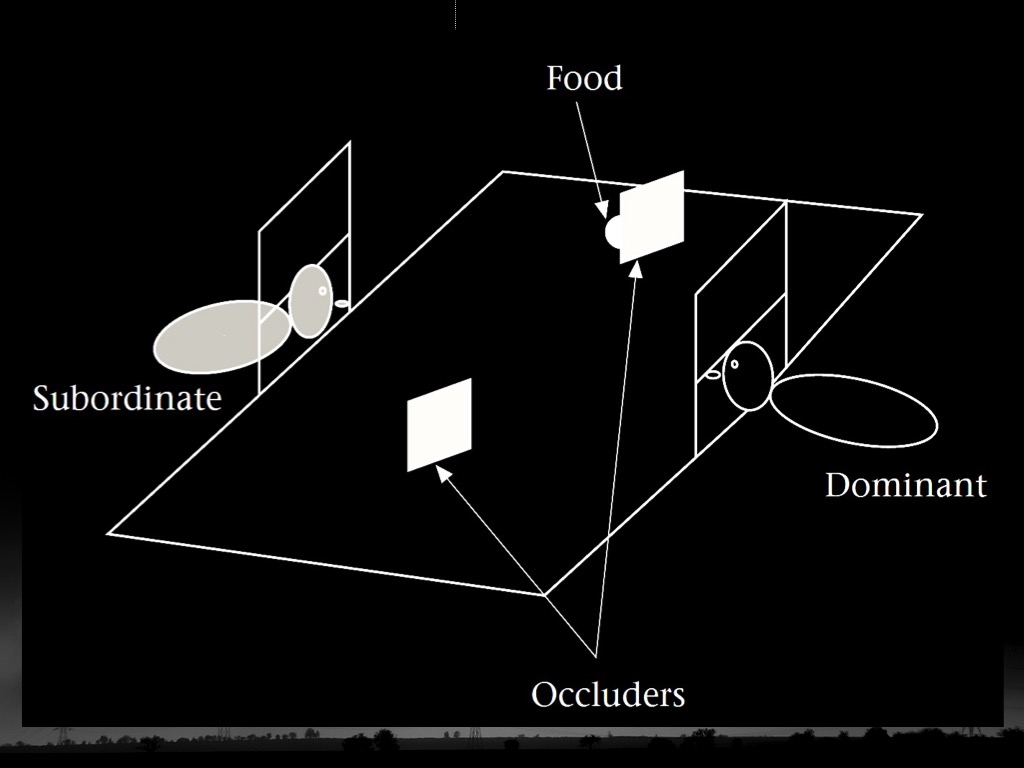

Apes, goals : food avoidance differs depending on competitors’ (mis)information (Hare et al, 2001; Kaminski et al, 2006)

Apes : avoid being seen or making sounds when taking food (Melis et al, 2006)

Apes : will exploit facts about what others can see in mirrors or through screens (Karg et al, 2015; Lurz et al, 2018)

Corvids : caching differs depending on what others can, or have, seen (Clayton et al, 2007; Bugnyar et al, 2016)

Dogs : responses to requests depend on what requester can see (Kaminski et al, 2009)

Ringtail lemurs, common marmosets : food avoidance depending on competitors’ line of sight (Sandel et al, 2011; Burkart & Heschl, 2007)

Premack & Woodruff, 1978 p. 515

So : do nonhumans have theories of mind?

tracking vs representing mental states

What is observed: that nonhumans track others’ mental states.

Tracking mental states does not require representing them.

So:

How can we draw conclusions about what nonhumans represent?

behaviour-reading demon -> we can’t

If your objection involves a behaviour-reading demon (or any kind of demon), then it is probably a merely sceptical issue rather than a scientific objection.

Three Responses to the Logical Problem

The logical problem

‘since mental state attribution in [nonhuman] animals will (if extant) be based on observable features of other agents’ behaviors and environment ... every mindreading hypothesis has ... a complementary behavior-reading hypothesis.’

‘Such a hypothesis proposes that the animal relies upon certain behavioral/environmental cues to predict another agent’s behavior ...’

Lurz (2011, 26)

Do any nonhuman animals ever represent others’ mental states?

1. Representing others’ mental states depends on making a transition from behaviour to mental state.

2. For any hypothesis about mindreading there is a ‘complementary hypothesis’ about behaviour reading.

3. The two hypotheses generate the same predictions.

4. No experiment can distinguish between them.
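The form of this argument can be shown with a toy sketch. It assumes, purely for illustration, that the only observable cues are whether a competitor was present and whether it was oriented towards the food, as in Povinelli and Vonk’s ‘Joe’ and ‘Mary’ example. The function names are hypothetical. `mindreading_predictor` routes its prediction through an attributed state (‘knows where the food is’). `behaviour_reading_predictor` maps the same cues directly onto the same prediction. Because the attributed state is itself fixed by observable cues (premise 1), the two predictors agree on every combination of cues (premise 3).

```python
from itertools import product

# Hypothetical observable cues: (was_present, was_oriented).
# These stand in for the 'behavioral/environmental cues' in Lurz's formulation.


def attribute_knowledge(was_present: bool, was_oriented: bool) -> bool:
    """Mindreading step: infer a mental state (knowing where the food is) from cues."""
    return was_present and was_oriented


def mindreading_predictor(was_present: bool, was_oriented: bool) -> bool:
    """Predict 'will go after the food' via the attributed mental state."""
    knows_location = attribute_knowledge(was_present, was_oriented)
    return knows_location


def behaviour_reading_predictor(was_present: bool, was_oriented: bool) -> bool:
    """Complementary hypothesis: map the very same cues straight onto the prediction."""
    return was_present and was_oriented


def predictions_agree() -> bool:
    """True if the two hypotheses make the same prediction for every cue combination."""
    return all(
        mindreading_predictor(p, o) == behaviour_reading_predictor(p, o)
        for p, o in product([False, True], repeat=2)
    )


if __name__ == "__main__":
    for p, o in product([False, True], repeat=2):
        print(p, o, mindreading_predictor(p, o), behaviour_reading_predictor(p, o))
    print("agree on all cue combinations:", predictions_agree())
```

In this toy case no choice of cues separates the two predictors. That is the sense in which premise 4 says no experiment can distinguish them. The sketch only illustrates the argument’s form. It makes no claim about how any animal actually processes cues.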

1. It is not a logical problem at all, but one that should be resolved by better experimental methods.

- we currently lack evidence for nonhuman mindreading

(except maybe from ‘goggles’ and ‘mirror’ experiments)

2. It is a merely logical problem (so a form of sceptical hypothesis).

- we already have evidence for nonhuman mindreading

3. It is an illusory problem, caused by a theoretical mistake.

- we’re thinking about the issue in the wrong way

‘Comparative psychologists test for mindreading in non-human animals by determining whether they detect the presence and absence of particular cognitive states in a wide variety of circumstances.’

Halina, 2015 p. 487

The ‘Logical Problem’ is a sceptical problem

∴

the evidence supports mindreading?

Apes : anticipatory gaze depends on protagonists’ false belief (Krupenye et al, 2017)

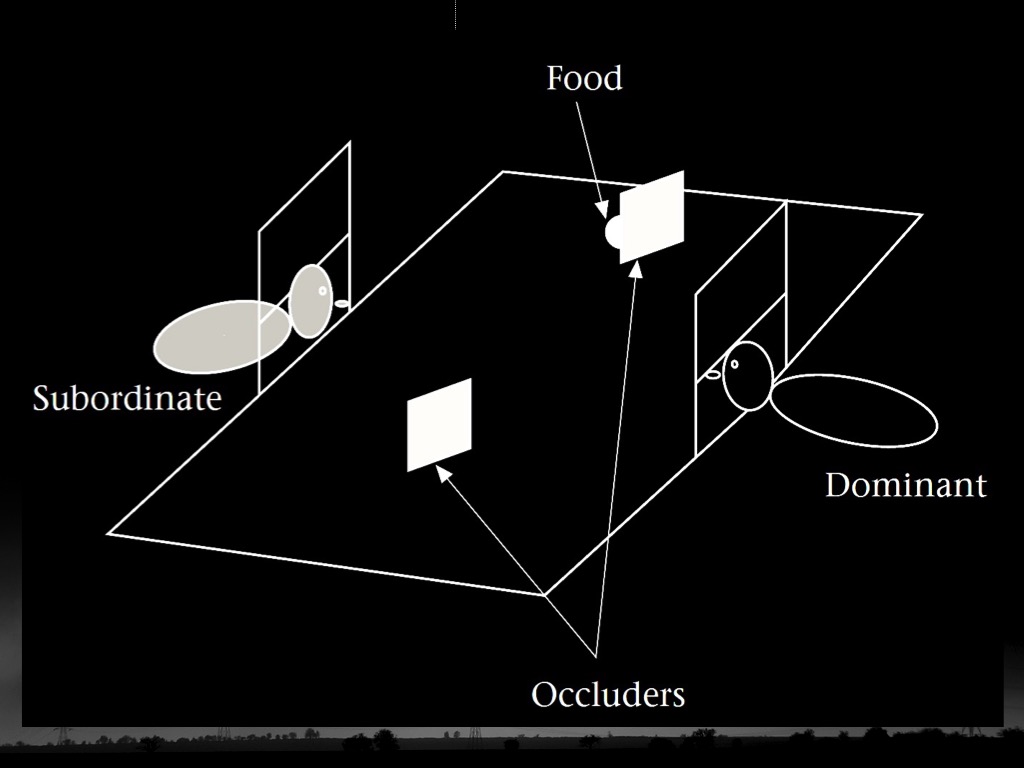

Apes, goals : food avoidance differs depending on competitors’ (mis)information (Hare et al, 2001; Kaminski et al, 2006)

Apes : avoid being seen or making sounds when taking food (Melis et al, 2006)

Apes : will exploit facts about what others can see in mirrors or through screens (Karg et al, 2015; Lurz et al, 2018)

Corvids : caching differs depending on what others can, or have, seen (Clayton et al, 2007; Bugnyar et al, 2016)

Dogs : responses to requests depend on what requester can see (Kaminski et al, 2009)

Ringtail lemurs, common marmosets : food avoidance depending on competitors’ line of sight (Sandel et al, 2011; Burkart & Heschl, 2007)

‘Comparative psychologists test for mindreading in non-human animals by determining whether they detect the presence and absence of particular cognitive states in a wide variety of circumstances.’

Halina, 2015 p. 487

Requirement:

We can distinguish, both within an individual and between individuals, mindreading which involves representing mental states from mindreading which does not.

1. It is not a logical problem at all, but one that should be resolved by better experimental methods.

- we currently lack evidence for nonhuman mindreading

(except maybe from ‘goggles’ and ‘mirror’ experiments)

2. It is a merely logical problem (so a form of sceptical hypothesis).

- we already have evidence for nonhuman mindreading

3. It is an illusory problem, caused by a theoretical mistake.

- we’re thinking about the issue in the wrong way

‘Nonhumans represent mental states’ is not a hypothesis

‘chimpanzees understand … intentions … perception and knowledge’

‘chimpanzees probably do not understand others in terms of a fully human-like belief–desire psychology’

Call & Tomasello (2008, 191)

‘the core theoretical problem in ... animal mindreading is that ... the conception of mindreading that dominates the field ... is too underspecified to allow effective communication among researchers’

Heyes (2015, 321)

‘Nonhumans represent behaviours only’ is also not a hypothesis

‘an intelligent chimpanzee could simply use the behavioural abstraction […]: “Joe was present and oriented; he will probably go after the food. Mary was not present; she probably won’t.”’

Povinelli and Vonk (2003)

‘because behavioural strategies are so unconstrained ... it is very difficult indeed, perhaps impossible, to design experiments that could show that animals are mindreading rather than behaviour reading.’

Heyes (2015, 322)

1. It is not a logical problem at all, but one that should be resolved by better experimental methods.

- we currently lack evidence for nonhuman mindreading

(except maybe from ‘goggles’ and ‘mirror’ experiments)

2. It is a merely logical problem (so a form of sceptical hypothesis).

- we already have evidence for nonhuman mindreading

3. It is an illusory problem, caused by a theoretical mistake.

- we’re thinking about the issue in the wrong way

tracking vs representing mental states

What is observed: that nonhumans track others’ mental states.

Tracking mental states does not require representing them.

So:

How can we draw conclusions about what nonhumans represent?

What does the ‘Logical Problem’ show?

Lurz, Krachun et al

The ‘Logical Problem’ can be overcome with better experimental design.

Halina, Heyes (?), et al

The ‘Logical Problem’ is a logical problem.

Its existence shows that these are the wrong experiments whereas those are the right ones.

Its existence shows that being able to track mental states does not logically entail being able to represent mental states.

The standard question:

Do nonhuman animals represent mental states or only behaviours?

Obstacle:

The ‘logical problem’ (Lurz 2011)

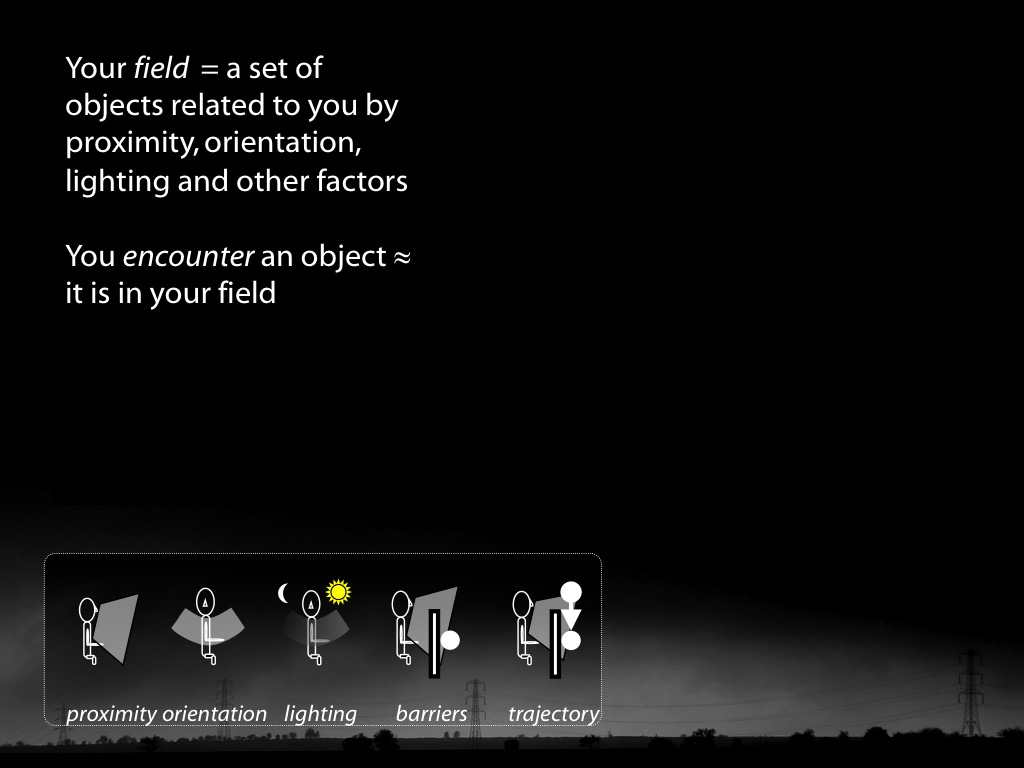

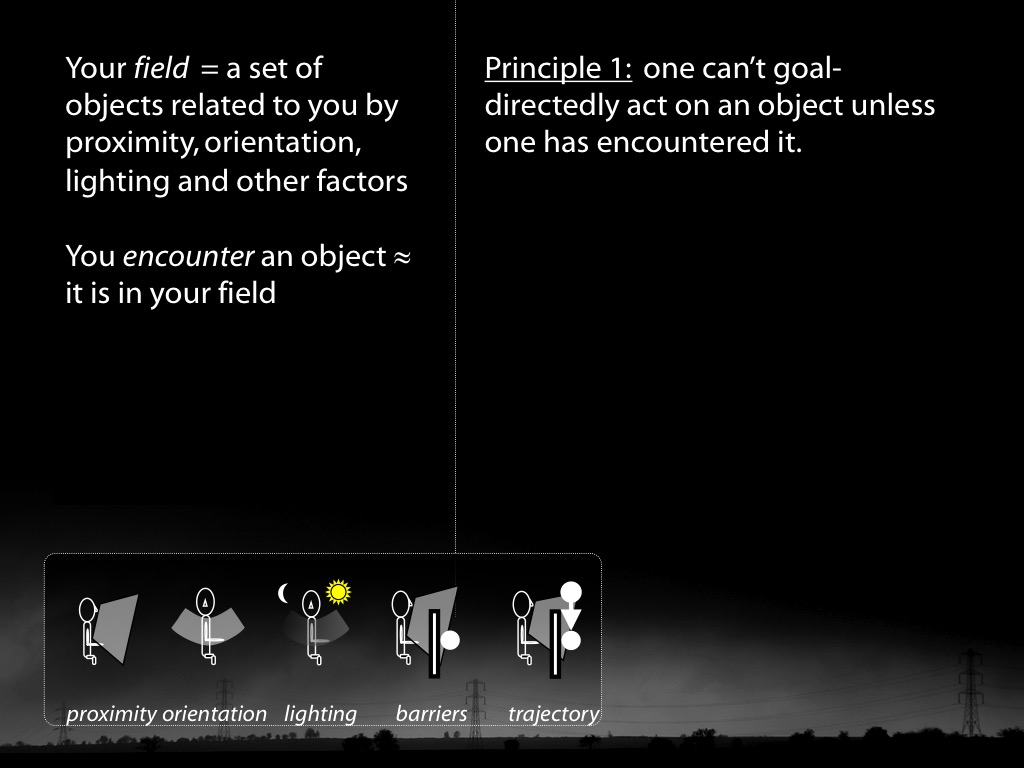

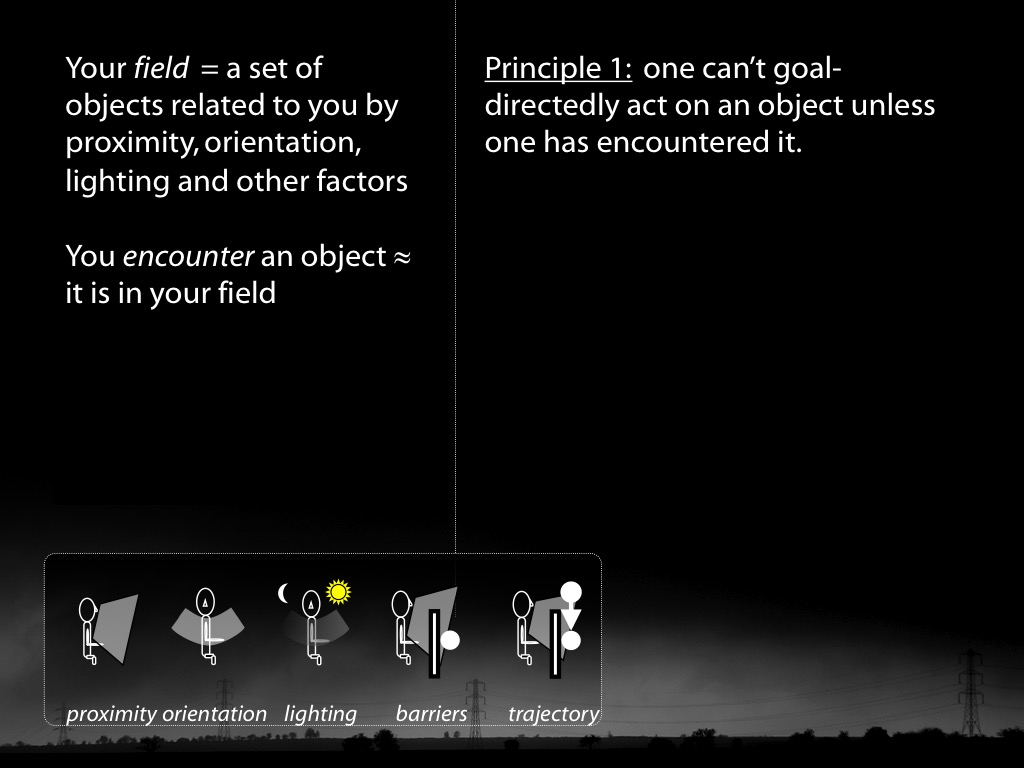

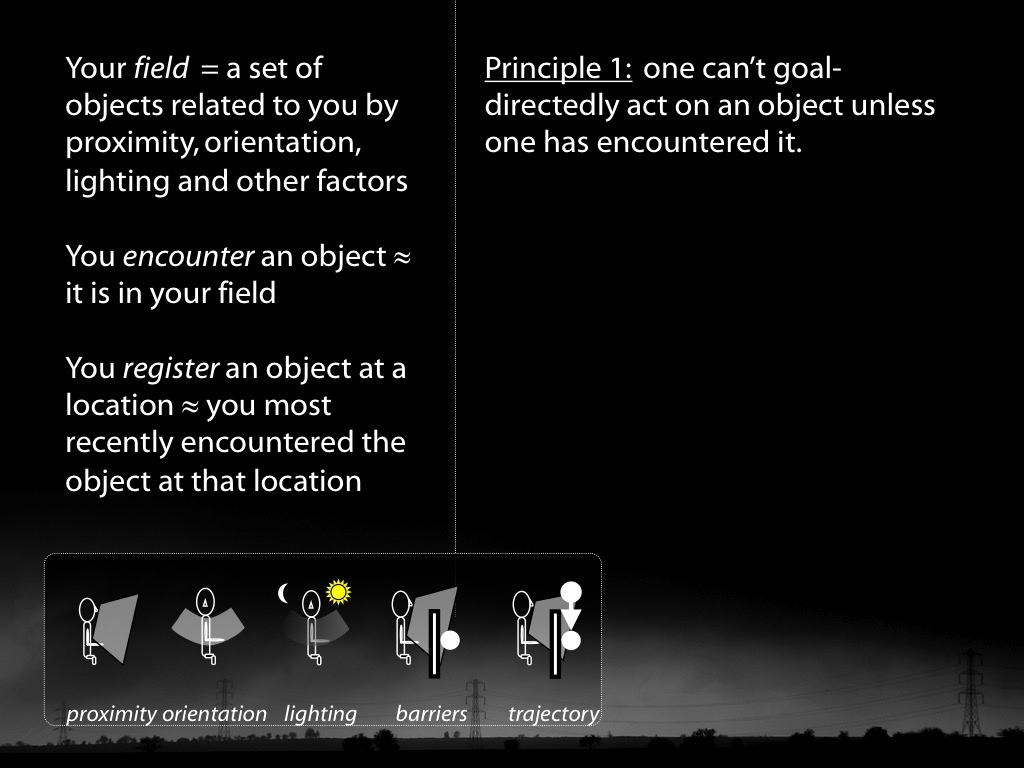

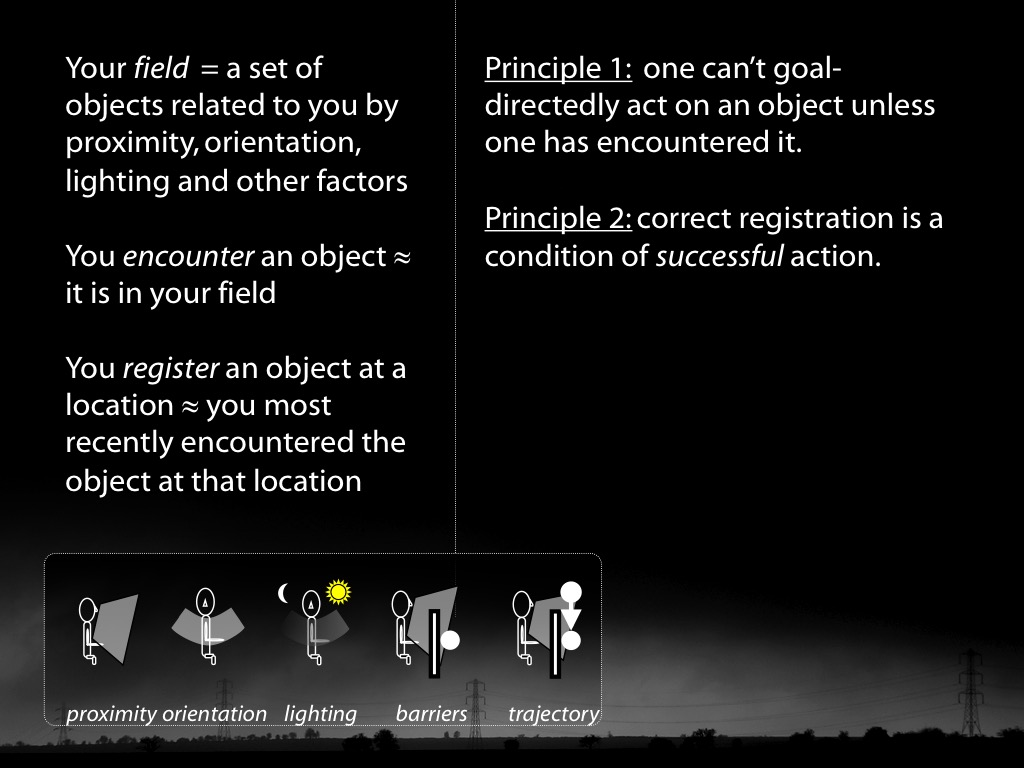

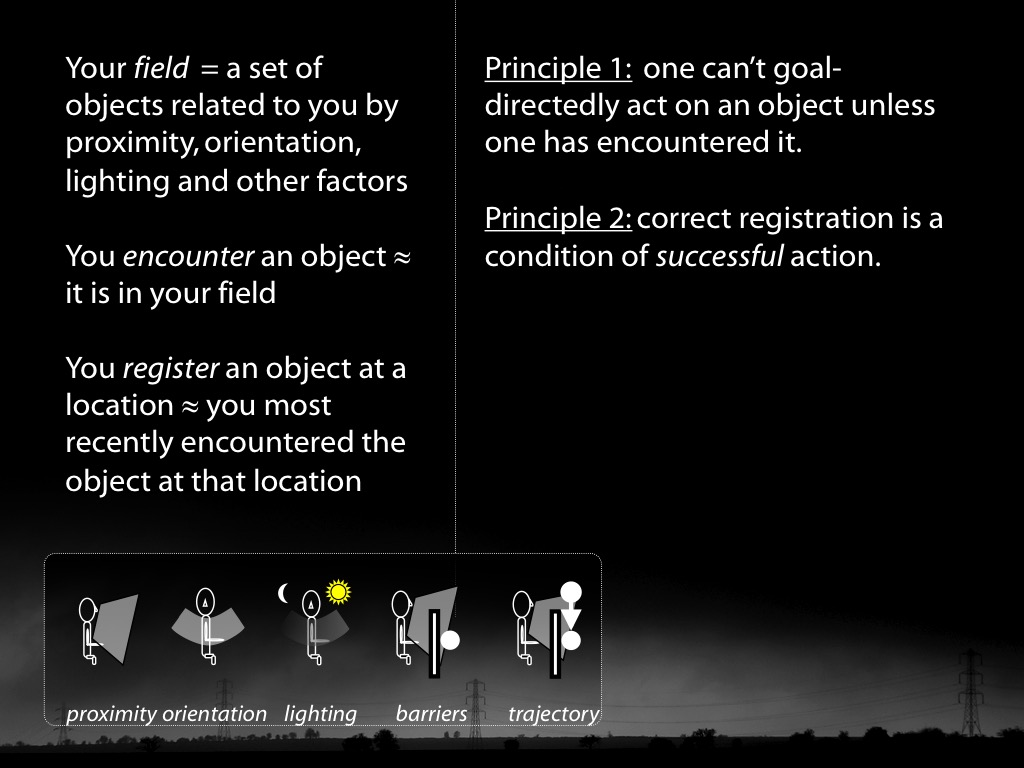

What could make others’ behaviours intelligible to nonhuman animals?

-- the teleological stance

What could make others’ mental states intelligible to nonhuman animals?

-- minimal theory of mind

Logical Problem: Summary

1. statement of what the problem is

2. three responses to the Logical Problem

My proposal :

3. it is a logical problem, not an experimental issue ...

4. ... but recognising this leaves open the question of what nonhumans represent.

A Different Approach

What do infants, chimps and scrub-jays reason about, or represent, that enables them, within limits, to track others’ perceptions, knowledge, beliefs and other propositional attitudes?

-- Is it mental states?

-- Or only behaviours?

What models of minds and actions, and of behaviours, and what kinds of processes, underpin mental state tracking in different animals?

Requirement 3: Predict Dissociations

‘the present evidence may constitute an implicit understanding of belief’

Krupenye et al, 2016 p. 113

Krachun et al, 2009 figure 2 (part)

Requirement 3: Predict Dissociations

Which tasks should chimps and jays pass and fail?

Requirement 2: Models

‘chimpanzees understand … intentions … perception and knowledge’

‘chimpanzees probably do not understand others in terms of a fully human-like belief–desire psychology’

Call & Tomasello, 2008 p. 191

Requirement 2: Models

How do chimps or jays variously model minds and actions?

‘Nonhumans represent mental states’ is not a hypothesis

‘the core theoretical problem in contemporary research on animal mindreading is that the bar—the conception of mindreading that dominates the field—is too low, or more specifically, that it is too underspecified to allow effective communication among researchers, and reliable identification of evolutionary precursors of human mindreading through observation and experiment’

Heyes, 2014, p. 318

Requirement 2: Models

Requirement 1: Diversity in Strategies

Requirement:

We can distinguish, both within an individual and between individuals, mindreading which involves representing mental states from mindreading which does not.

Three Requirements

1. Diversity in Strategies

2. Models

3. Predict Dissociations

What models of minds and actions, and of behaviours, and what kinds of processes, underpin mental state tracking in different animals?

Way forward:

1. Construct a theory of behaviour reading

2. Construct a theory of mindreading

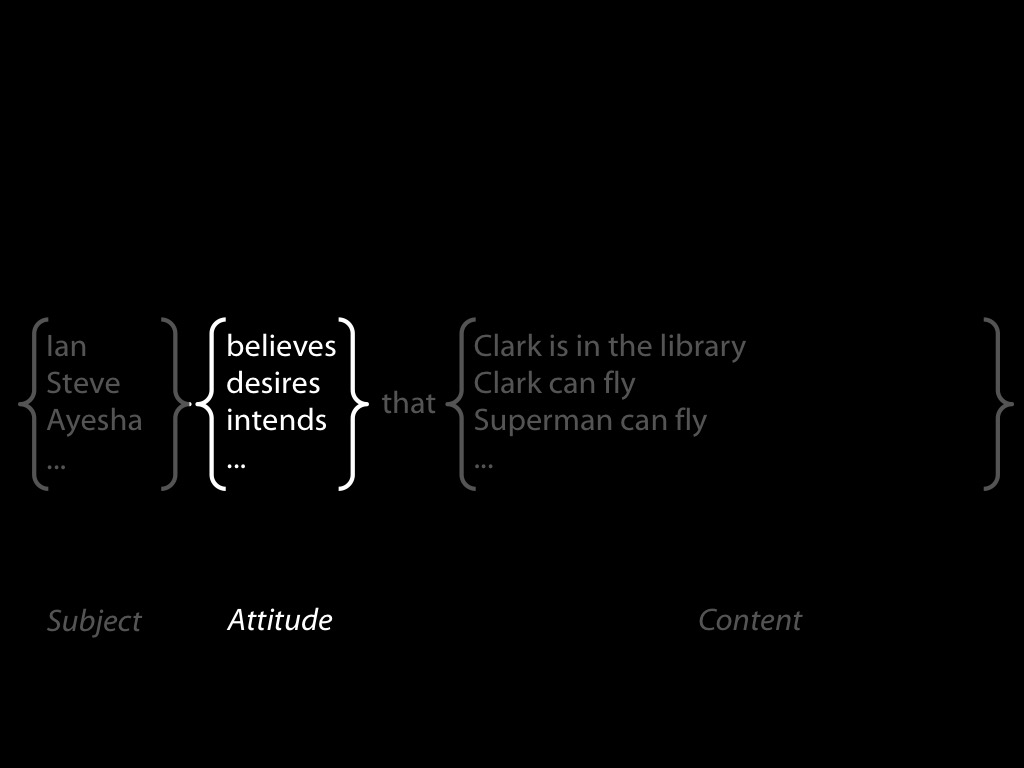

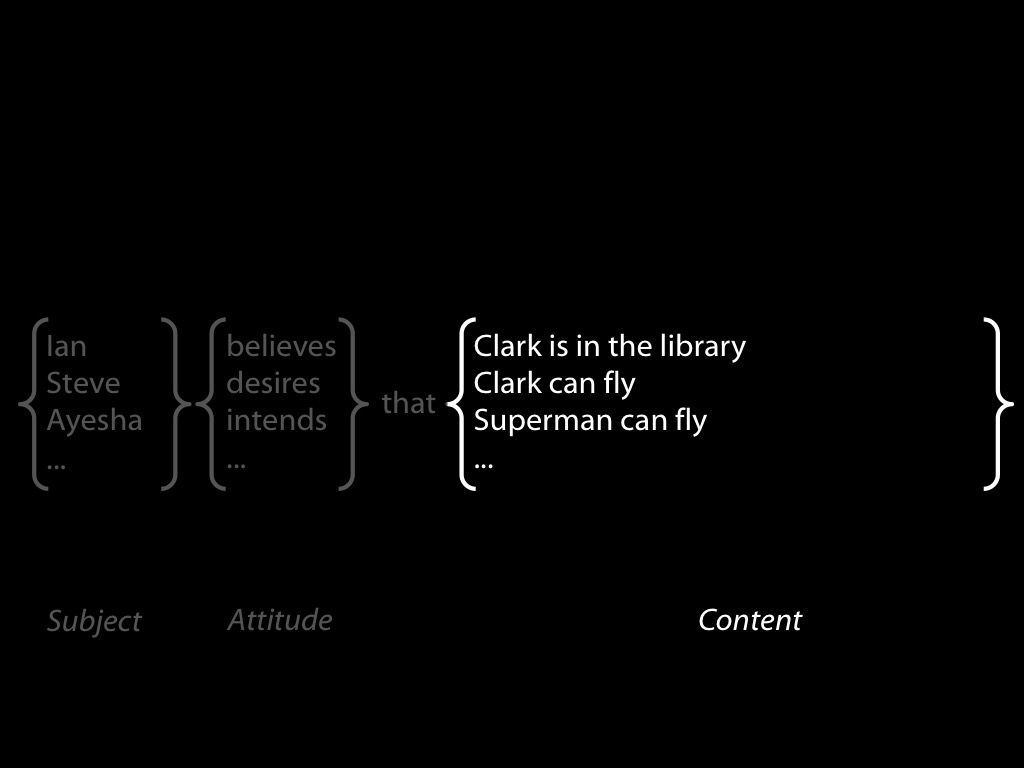

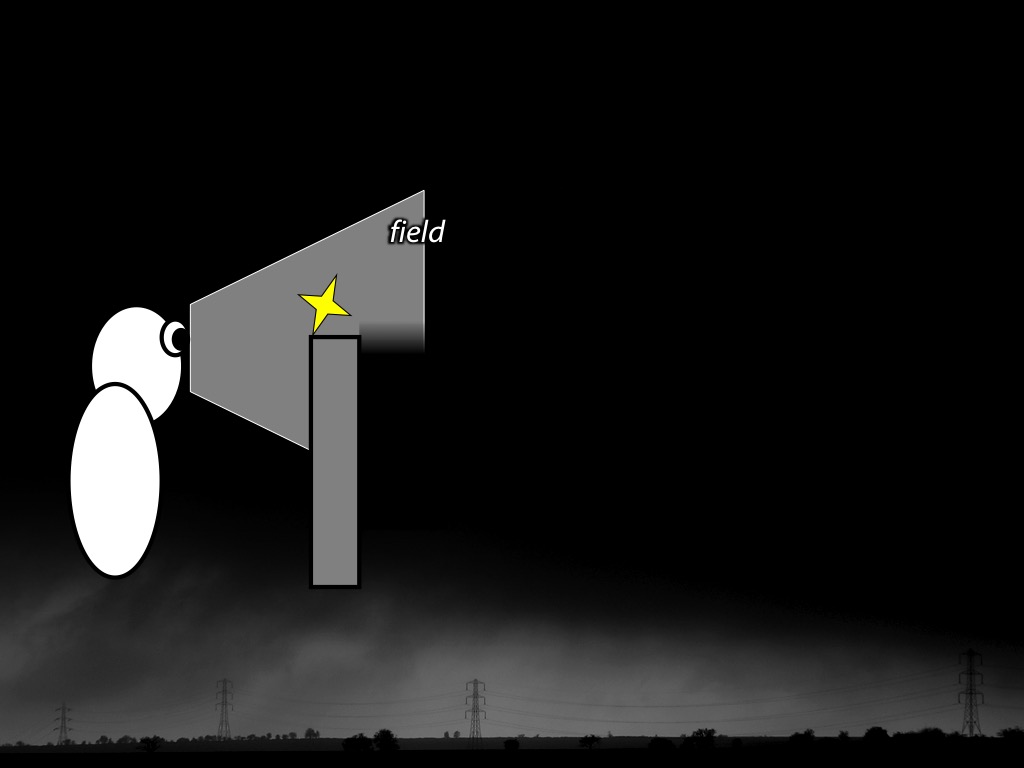

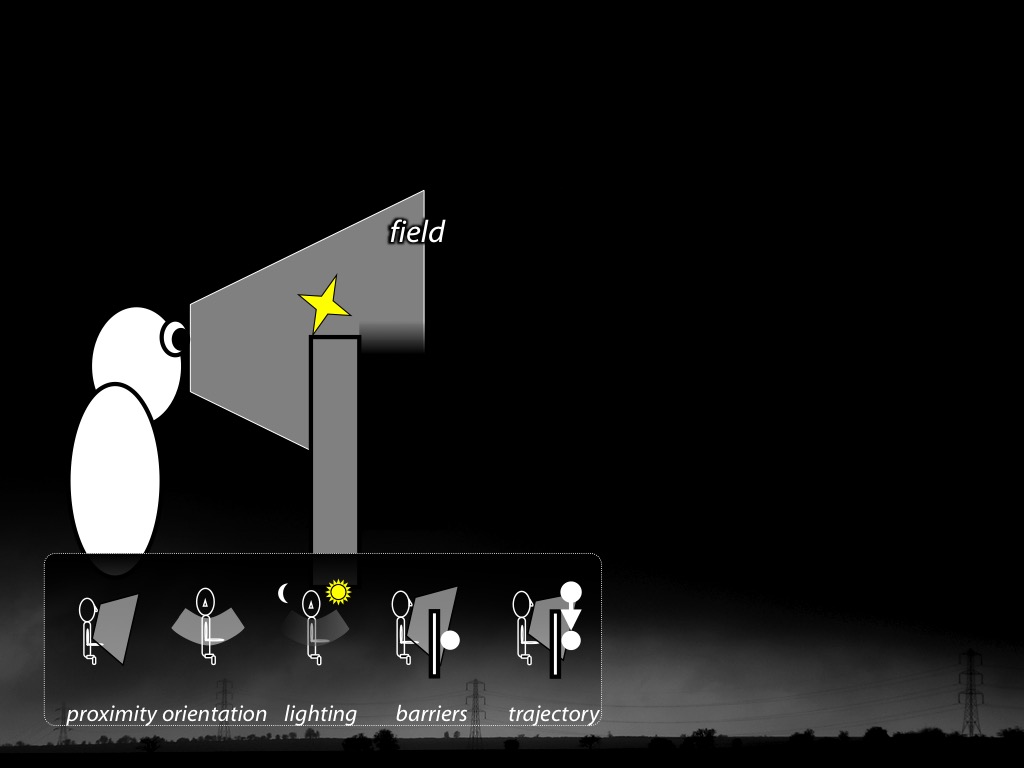

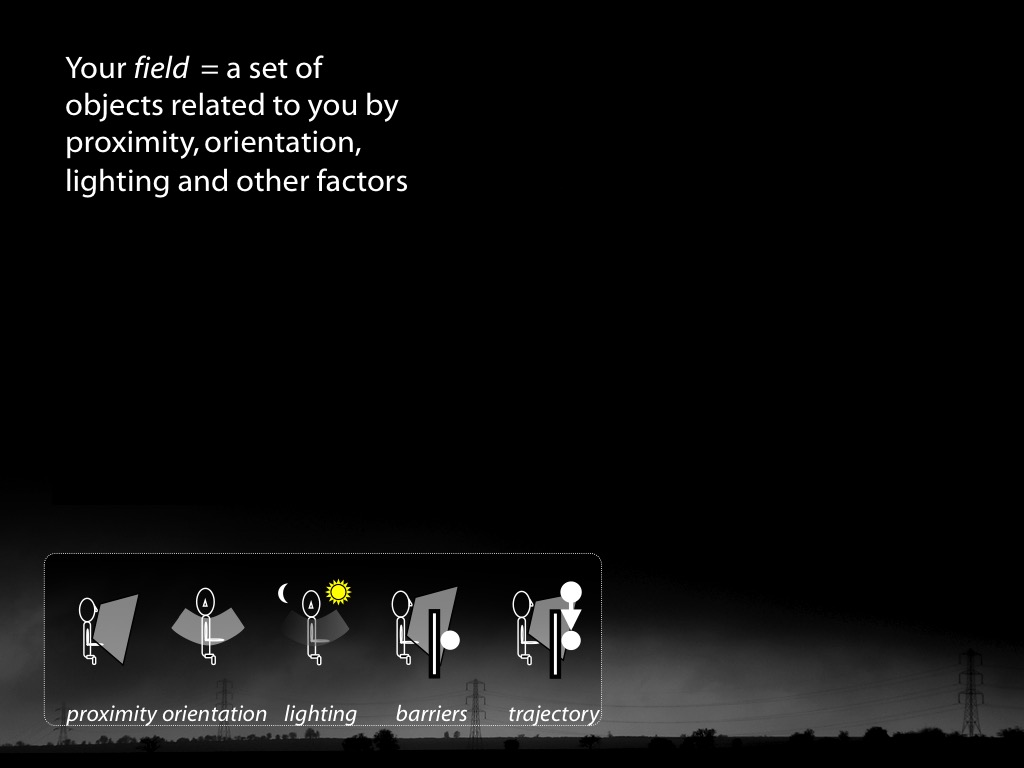

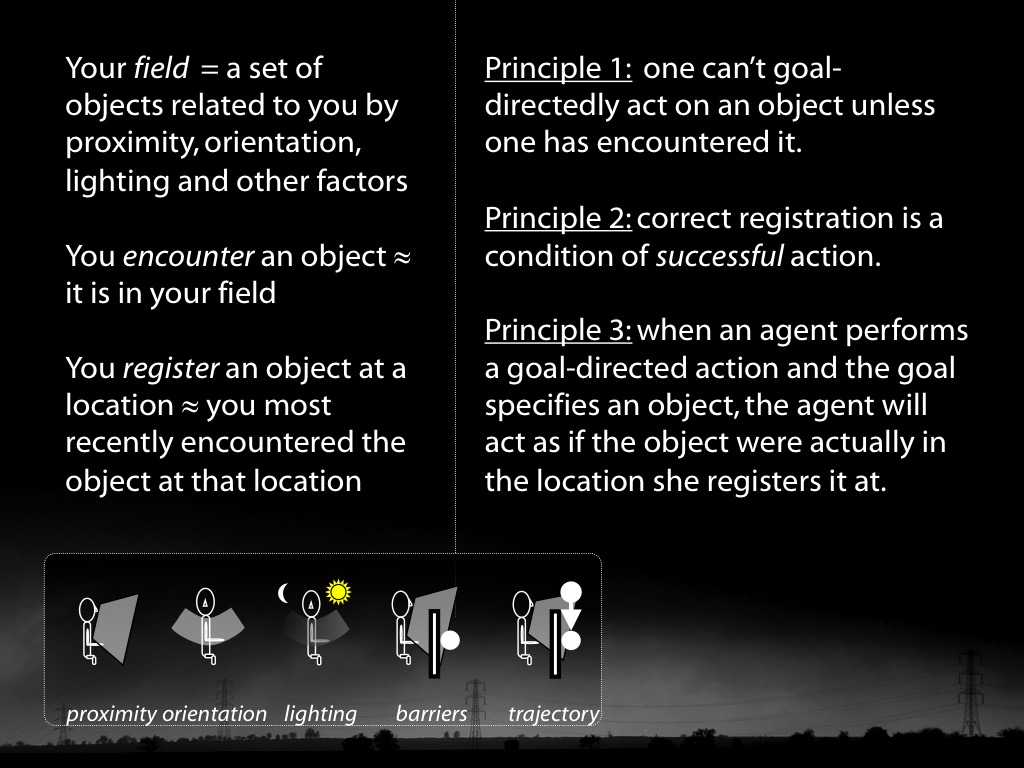

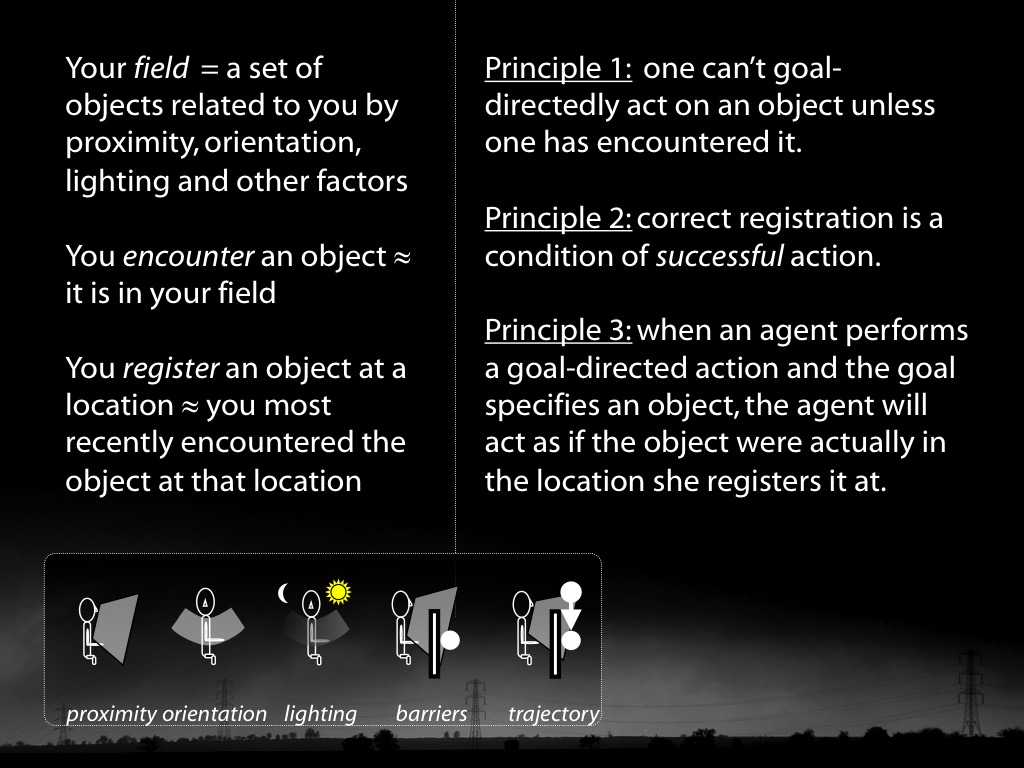

Minimal Theory of Mind

What models of minds and actions underpin mental state tracking in different animals?

Krupenye et al, 2017 (movie 1)

Anything unclear?

Objections?

The Dogma of Mindreading

Fact:

Minimal theory of mind specifies a model of minds and actions,

one which could in principle explain how chimps, jays or other animals track mental states.

Conjecture:

Nonhuman mindreading processes are characterised by a minimal model of minds and actions.

What models of minds and actions underpin mental state tracking in chimpanzees, scrub jays and other animals?

Does the chimpanzee have a theory of mind?

Could a system characterised by minimal theory of mind explain chimpanzee theory of mind abilities?

- yes

But does it?

conclusion

The ‘Logical Problem’ defined

Three responses to the ‘Logical Problem’ examined

How can we draw conclusions about what nonhumans represent from evidence about tracking?

Proposal : identify models of minds and actions, and of behaviours, which could underpin mental state tracking in different animals.

The Dogma of Mindreading rejected

Minimal Theory of Mind constructed